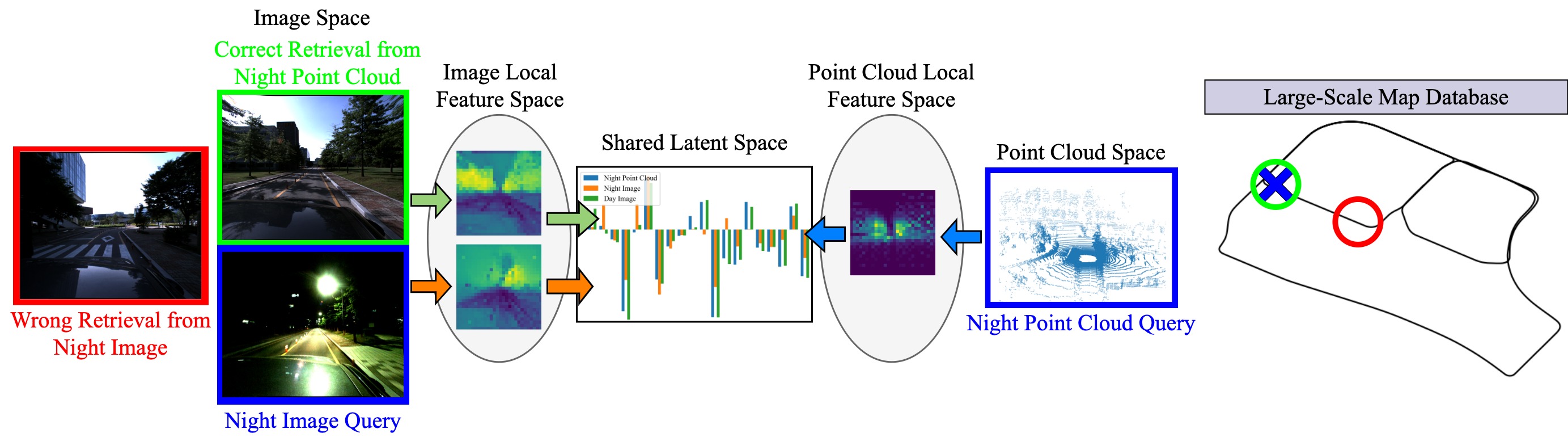

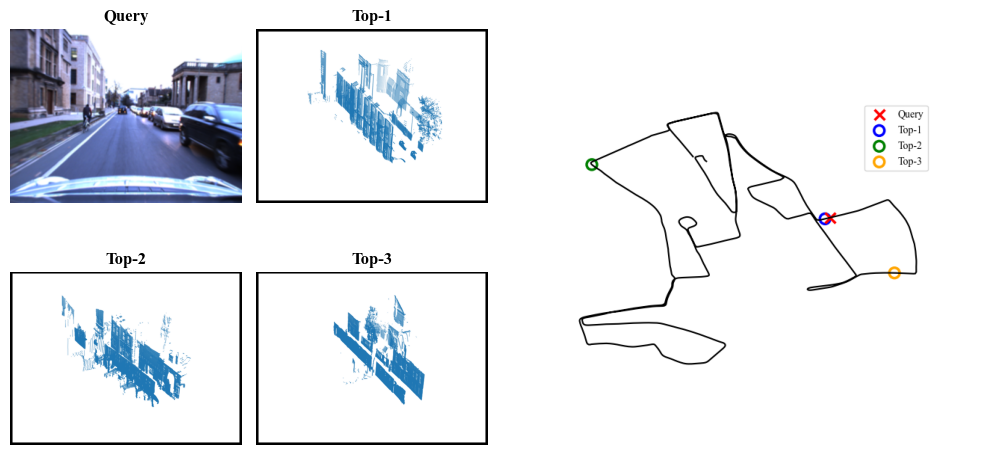

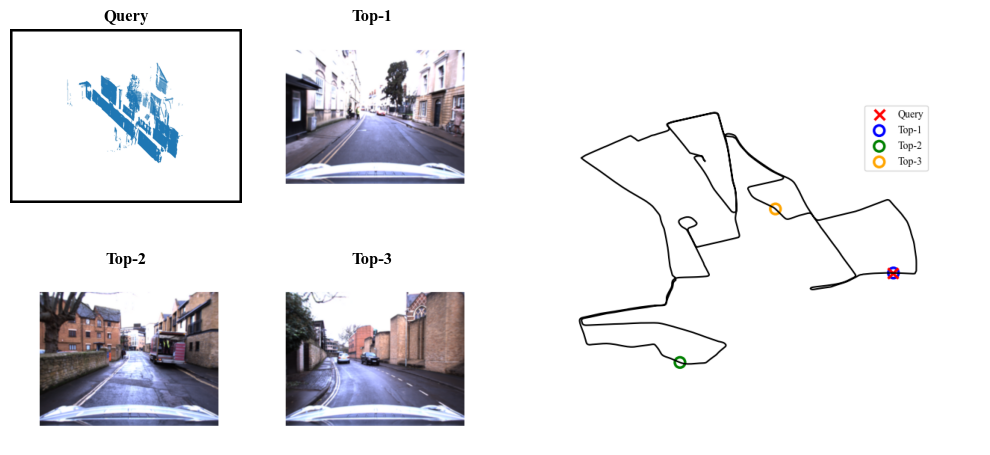

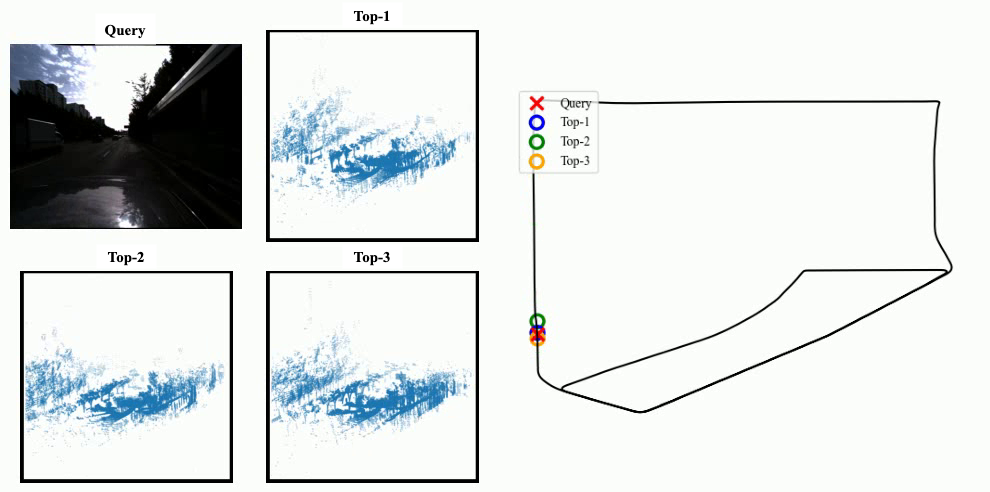

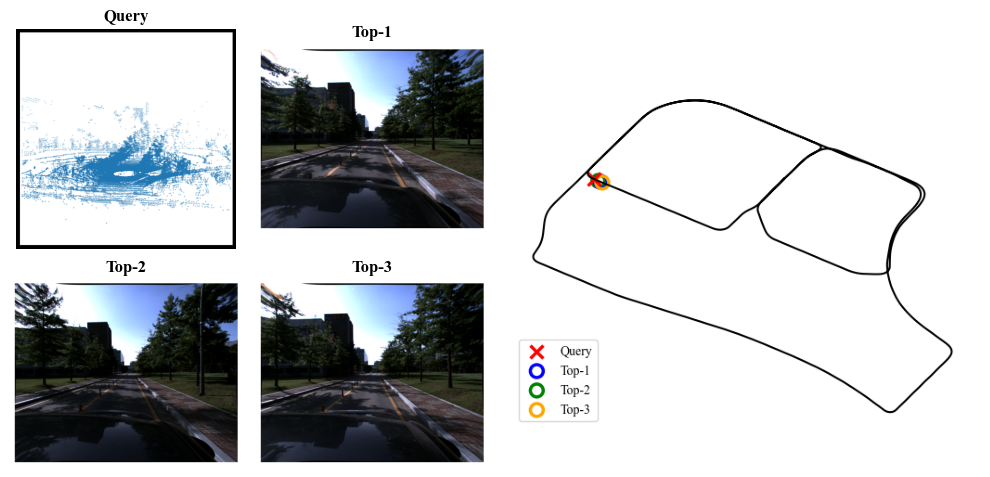

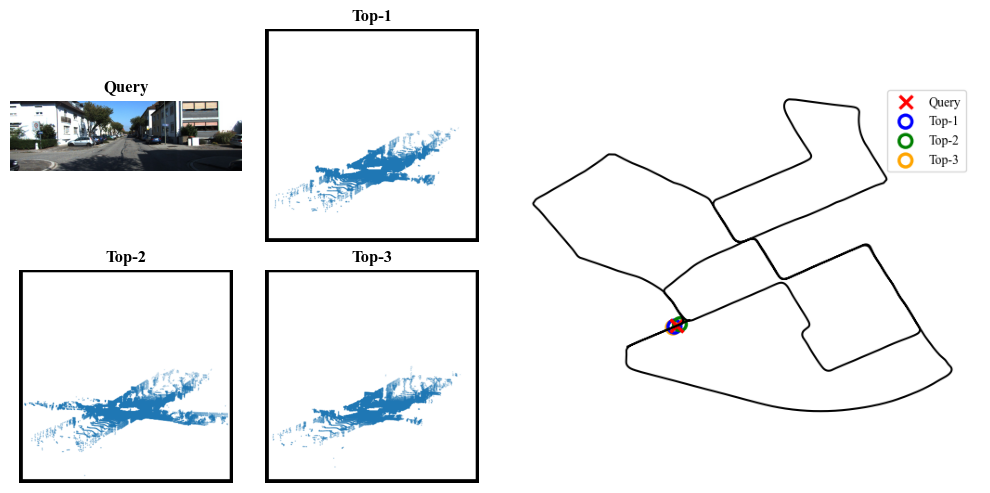

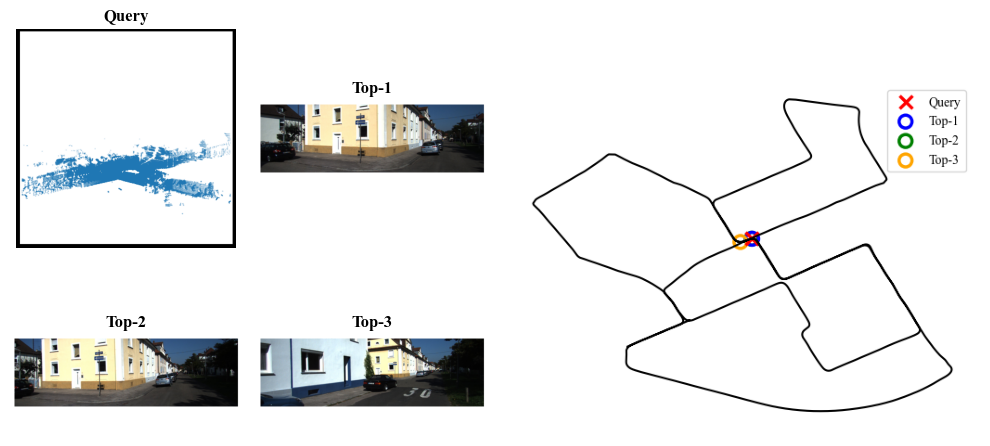

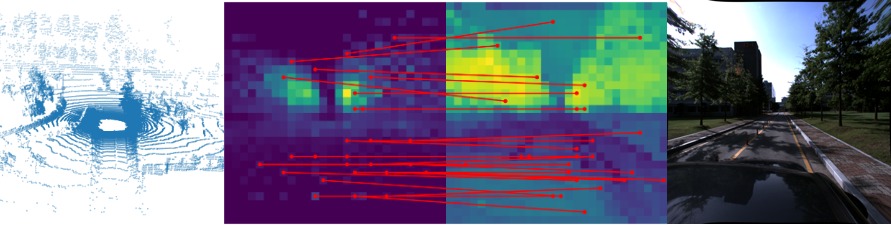

Recent work on global place recognition treats the task as a retrieval problem, where off-the-shelf global descriptors are commonly designed for either the image-based or the LiDAR-based modality. However, accurate image-LiDAR global place recognition is non-trivial, since extracting consistent and robust global descriptors from different domains (2D images and 3D point clouds) is challenging. To address this issue, we propose a novel Voxel-Cross-Pixel (VXP) approach, which establishes voxel and pixel correspondences in a self-supervised manner and brings them into a shared feature space. Specifically, VXP is trained in a two-stage manner that first explicitly exploits local feature correspondences and then enforces similarity of global descriptors. Extensive experiments on three benchmarks (Oxford RobotCar, ViViD++ and KITTI) demonstrate that our method surpasses state-of-the-art cross-modal retrieval methods by a large margin. The code will be publicly available.
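To make the retrieval formulation concrete, here is a minimal sketch of cross-modal lookup once image and LiDAR descriptors live in a shared feature space. It is not the VXP implementation: the function names (`l2_normalize`, `retrieve_top_k`), the use of cosine similarity, the descriptor dimension and the random toy data are all assumptions made for illustration.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products equal cosine similarity."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def retrieve_top_k(query_desc, database_desc, k=1):
    """Return, per query, the indices of the k most similar database descriptors."""
    q = l2_normalize(query_desc)
    d = l2_normalize(database_desc)
    sim = q @ d.T  # shape: (num_queries, num_database)
    return np.argsort(-sim, axis=1)[:, :k]


# Toy data (assumption): 5 "LiDAR" database descriptors of dimension 8, and
# 2 "image" queries that are slightly perturbed copies of database rows 3 and 0.
rng = np.random.default_rng(0)
lidar_db = rng.normal(size=(5, 8))
image_queries = lidar_db[[3, 0]] + 0.01 * rng.normal(size=(2, 8))

top1 = retrieve_top_k(image_queries, lidar_db, k=1)
print(top1[:, 0])  # index of the best-matching LiDAR descriptor per image query
```

In a real pipeline the two descriptor matrices would come from the image and point-cloud branches of a trained model; the lookup itself only relies on both modalities being embedded in the same space.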

@article{li2024vxp,
  title={VXP: Voxel-Cross-Pixel Large-scale Image-LiDAR Place Recognition},
  author={Li, Yun-Jin and Gladkova, Mariia and Xia, Yan and Wang, Rui and Cremers, Daniel},
  journal={arXiv preprint arXiv:2403.14594},
  year={2024}
}